A processor, also known as a central processing unit (CPU), is often described as the brain of a computer. It retrieves and executes instructions stored in memory, and performs basic arithmetic, logic, and input/output operations. A CPU typically has one or more cores, each of which executes its own stream of instructions, so multiple cores can work in parallel. The clock speed of a CPU, measured in gigahertz (GHz), is the number of clock cycles it completes per second (1 GHz is one billion cycles per second). Within the same processor design, a higher clock speed generally means faster execution, but the amount of work done per cycle also matters, so GHz alone is not a reliable way to compare different designs. The architecture of a CPU, such as its instruction set and memory hierarchy, also affects its performance.

How does it work?

A processor works by fetching instructions from memory, decoding them to determine the operation to be performed, and then executing the instruction.

- Fetch: The CPU retrieves an instruction from memory using the instruction pointer (IP), which contains the memory address of the next instruction to be executed.

- Decode: The instruction is decoded by the instruction decoder, which converts the instruction into a set of signals that the CPU can understand and execute.

- Execute: The CPU then performs the operation specified by the instruction. This can include arithmetic operations (such as addition and multiplication), logical operations (such as AND and OR), and memory access operations (such as loading and storing data).

- Write back: The result of the operation is then written back to memory or a register.

- Finally, the instruction pointer is incremented to point to the next instruction, and the process repeats.
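
The steps above can be sketched as a small loop in Python. This is a toy model, not how any real CPU is implemented: the instruction format, the opcode names (`LOADI`, `ADD`, `MUL`, `HALT`) and the register names are all hypothetical and chosen only for illustration.

```python
# Toy machine: each instruction is a tuple (opcode, dest, src1, src2).
# The format and the opcode names are hypothetical.
PROGRAM = [
    ("LOADI", "r0", 6, None),     # r0 <- 6
    ("LOADI", "r1", 7, None),     # r1 <- 7
    ("MUL",   "r2", "r0", "r1"),  # r2 <- r0 * r1
    ("ADD",   "r2", "r2", "r0"),  # r2 <- r2 + r0
    ("HALT",  None, None, None),
]


def run(program):
    registers = {"r0": 0, "r1": 0, "r2": 0}
    ip = 0  # instruction pointer: index of the next instruction
    while True:
        instruction = program[ip]             # fetch
        opcode, dest, a, b = instruction      # decode
        if opcode == "HALT":
            break
        if opcode == "LOADI":                 # execute
            result = a
        elif opcode == "ADD":
            result = registers[a] + registers[b]
        elif opcode == "MUL":
            result = registers[a] * registers[b]
        else:
            raise ValueError(f"unknown opcode: {opcode}")
        registers[dest] = result              # write back
        ip += 1                               # update the instruction pointer
    return registers


print(run(PROGRAM))  # {'r0': 6, 'r1': 7, 'r2': 48}
```

Each pass through the `while` loop corresponds to one trip through the cycle for a single instruction.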

In addition to these basic steps, modern processors also employ various techniques to improve performance, such as pipelining, which allows multiple instructions to be in various stages of execution at the same time, and out-of-order execution, which allows the CPU to execute instructions in a different order than they were fetched to improve the utilization of the processor’s resources.
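
To get a feel for why pipelining helps, the following sketch compares cycle counts with and without it. It assumes an idealized pipeline in which every stage takes exactly one cycle and nothing ever stalls; real processors fall short of this because of branches, cache misses, and dependencies between instructions. The function names are made up for this example.

```python
def unpipelined_cycles(n_instructions, n_stages):
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages):
    """Ideal pipeline: the first instruction takes n_stages cycles to come out,
    then one further instruction completes on every following cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


n, stages = 100, 5
slow = unpipelined_cycles(n, stages)
fast = pipelined_cycles(n, stages)
print(slow, fast, round(slow / fast, 2))  # 500 104 4.81
```

With many instructions, the speedup approaches the number of stages, which is the best case for this simple model.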

First stage: Fetch

The fetch stage is the first step in the instruction execution cycle, where the CPU retrieves an instruction from memory using the instruction pointer (IP). The instruction pointer contains the memory address of the next instruction to be executed.

The instruction is fetched from memory and brought into the processor’s instruction queue or instruction buffer. This allows the CPU to begin decoding the instruction while it is fetching the next one.

In modern processors, the instruction fetch unit is often integrated with the instruction cache, which is a small, fast memory that stores frequently used instructions. The instruction cache is used to reduce the number of times the CPU needs to access main memory, which is much slower than the cache. This helps to improve the overall performance of the processor.
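
The effect of an instruction cache can be illustrated with a small simulation. The sketch below models a direct-mapped cache, which is only one of several possible cache organizations, and the sizes used (4 lines of 16 bytes, 4-byte instructions) are arbitrary assumptions for the example.

```python
class DirectMappedCache:
    """Minimal direct-mapped cache model that only tracks hits and misses."""

    def __init__(self, num_lines, line_size):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines  # which memory block each line holds
        self.hits = 0
        self.misses = 0

    def access(self, address):
        block = address // self.line_size  # memory block containing the address
        index = block % self.num_lines     # the one cache line it can occupy
        tag = block // self.num_lines      # identifies the block within that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag             # miss: load the block from main memory
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, line_size=16)
# A loop body of eight 4-byte instructions (addresses 0 to 28), executed 10 times.
for _ in range(10):
    for address in range(0, 32, 4):
        cache.access(address)
print(cache.hits, cache.misses)  # 78 2
```

Only the first touch of each 16-byte block goes to main memory; every later fetch of the loop body is served from the cache.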

Additionally, many processors use branch prediction to guess the outcome and target address of a branch instruction before it is executed. This allows the CPU to start fetching instructions from the predicted path right away instead of waiting for the branch to be resolved. When the prediction is correct, the delay is avoided; when it is wrong, the speculatively fetched instructions are discarded and fetching restarts from the correct address.
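
One well-known textbook scheme for branch prediction is the two-bit saturating counter. The sketch below is an assumption chosen for illustration, not a description of what any particular processor does; the class and function names are hypothetical.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: 0-1 predict 'not taken', 2-3 predict 'taken'."""

    def __init__(self):
        self.counter = 1  # start at 'weakly not taken'

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step towards the actual outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def count_mispredictions(outcomes):
    predictor = TwoBitPredictor()
    mispredictions = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            mispredictions += 1
        predictor.update(taken)
    return mispredictions


# A loop branch: taken 9 times, then not taken on exit; the loop runs 3 times.
outcomes = ([True] * 9 + [False]) * 3
print(count_mispredictions(outcomes), "of", len(outcomes))  # 4 of 30
```

Because the counter needs two wrong guesses in a row to flip its prediction, a single loop exit does not disturb the prediction for the next run of the loop.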

Overall, the fetch stage is critical for the efficient operation of the CPU, as it retrieves the instructions that the processor will execute, and a faster and more efficient fetch stage can help to improve the overall performance of the processor.

Second stage: Decode

The decode stage is the second step in the instruction execution cycle, where the CPU decodes the instruction that was fetched in the previous stage. The instruction decoder converts the instruction into a set of signals that the CPU can understand and execute.

The instruction decoder is responsible for interpreting the instruction’s opcode (operation code) and operands (the data on which the instruction operates) and generating the appropriate control signals to execute the instruction. It also determines which registers or memory locations the instruction will read from and write to.

The instruction decoder is a combinational logic circuit that takes the instruction as input and produces the control signals as output. The control signals are used to control the operation of the Arithmetic Logic Unit (ALU) and other components of the CPU.

The decoding process is specific to the instruction set architecture (ISA) of the processor, which defines the set of instructions that the CPU can execute and the format of those instructions.
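
As a rough illustration of what decoding involves, the sketch below splits a 16-bit instruction word into an opcode and three register fields. The format (4 bits per field) and the opcode table are invented for this example and do not correspond to any real ISA.

```python
# Hypothetical 16-bit format:
#   bits 15-12: opcode | bits 11-8: destination | bits 7-4: source 1 | bits 3-0: source 2
OPCODES = {0x1: "ADD", 0x2: "SUB", 0x3: "AND", 0x4: "OR"}


def decode(word):
    opcode = (word >> 12) & 0xF
    rd = (word >> 8) & 0xF
    rs1 = (word >> 4) & 0xF
    rs2 = word & 0xF
    if opcode not in OPCODES:
        raise ValueError(f"illegal opcode: {opcode:#x}")
    return {"op": OPCODES[opcode], "rd": rd, "rs1": rs1, "rs2": rs2}


print(decode(0x1312))  # {'op': 'ADD', 'rd': 3, 'rs1': 1, 'rs2': 2}
```

In hardware, the shifts and masks are simply wires routed from fixed bit positions, and the opcode lookup becomes the logic that drives the control signals.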

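In some modern processors, decoded instructions are cached (for example, in a micro-operation cache) so that frequently executed instructions do not need to be decoded again, which improves performance. The complexity of decoding also depends on the style of the instruction set: reduced instruction set computing (RISC) architectures typically use simple, fixed-length instruction formats that are easier to decode, while complex instruction set computing (CISC) architectures often use variable-length instructions that require more elaborate decoders.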

Overall, the decode stage is critical for the efficient operation of the CPU as it converts the instruction into a set of signals that the CPU can understand and execute, and a faster and more efficient decoding stage can help to improve the overall performance of the processor.

Third stage: Execute

The third stage in the instruction execution cycle is the execute stage. In this stage, the CPU performs the operation specified by the instruction that was decoded in the previous stage. This can include arithmetic operations (such as addition and multiplication), logical operations (such as AND and OR), and memory access operations (such as loading and storing data).

The execution of an instruction is controlled by the control signals generated by the instruction decoder, which are used to control the operation of the Arithmetic Logic Unit (ALU) and other components of the CPU.

The ALU is responsible for performing arithmetic and logical operations on data. It takes the operands from registers or memory as input and produces the result of the operation as output. The ALU is a combinational logic circuit that can perform a wide variety of operations, depending on the instruction being executed.
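
The behaviour of an ALU can be modelled as a function from an operation and two operands to a result plus status flags. The sketch below assumes an 8-bit ALU with four operations and two flags; these choices, and the simplified carry flag, are assumptions made for the example.

```python
MASK = 0xFF  # model an 8-bit ALU: results wrap around at 256


def alu(op, a, b):
    """Return (result, flags) for a small set of operations."""
    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        raw = a - b
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    else:
        raise ValueError(f"unsupported operation: {op}")
    result = raw & MASK
    flags = {
        "zero": result == 0,
        # Simplification: the carry flag is only modelled for addition.
        "carry": op == "ADD" and raw > MASK,
    }
    return result, flags


print(alu("ADD", 200, 100))  # (44, {'zero': False, 'carry': True})
print(alu("SUB", 5, 5))      # (0, {'zero': True, 'carry': False})
```

The `op` argument plays the role of the control signals produced by the decoder: it selects which operation the ALU applies to its inputs.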

In modern processors, the execution stage may have multiple functional units, such as integer ALUs, a floating-point unit (FPU), or vector (SIMD) units, that perform specific types of operations more efficiently.

In addition to the instruction execution, the execute stage also includes memory access operations, such as loading data from memory into registers and storing data from registers into memory.

Overall, the execute stage is critical for the efficient operation of the CPU as it performs the operation specified by the instruction, and a faster and more efficient execution stage can help to improve the overall performance of the processor.

Fourth stage: Write back

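The fourth stage in the instruction execution cycle is the write-back stage. In this stage, the results of the operation performed in the execute stage are written back to a register or to memory. In the classic five-stage pipeline model, accessing memory is treated as a separate memory access stage that comes just before write-back; the two are described together here.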

In the case of register-to-register instructions, the result is written back to the destination register, where later instructions can read it.

In the case of memory-to-register instructions (loads), the value read from memory is written to the destination register. In the case of register-to-memory instructions (stores), the value is written to the memory location specified by the instruction. Both involve accessing the memory subsystem, which can be slower than a register-to-register instruction.

In a multi-core processor or a multi-processor system, written data must also stay consistent, so that all cores or processors have a consistent view of memory. This is handled by the cache and memory subsystem using cache coherence protocols, rather than by the write-back stage alone.

Overall, the write-back stage is critical for the efficient operation of the CPU as it writes the results of the operation performed in the execute stage, and a faster and more efficient write-back stage can help to improve the overall performance of the processor.

Final stage: Instruction pointer update

The final stage in the instruction execution cycle is the instruction pointer update stage. In this stage, the instruction pointer (IP) is incremented to point to the next instruction, and the process repeats. The IP is a register that holds the memory address of the next instruction to be executed.

After the write-back stage, the IP is updated with the address of the next instruction to be executed. This can be the next instruction in memory (sequential execution) or, if the current instruction is a jump or a taken branch, the branch target at a different memory location.

In the case of conditional branches, the CPU uses the result of an earlier operation, such as a comparison or the condition flags it set, to determine whether or not to change the instruction pointer to the target of the branch. This is called branch resolution.
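
The choice of the next instruction pointer value can be sketched as a small function. The instruction kinds `JMP` (unconditional jump) and `BEQ` (branch if the zero flag is set), the single zero flag, and the fixed 4-byte instruction size are all hypothetical simplifications.

```python
def next_ip(ip, instruction, zero_flag, instruction_size=4):
    """Return the address of the next instruction to fetch.

    `instruction` is a (kind, target) pair; `target` is ignored for
    instructions that are not branches or jumps.
    """
    kind, target = instruction
    if kind == "JMP":                 # unconditional jump: always redirect
        return target
    if kind == "BEQ" and zero_flag:   # conditional branch, resolved as taken
        return target
    return ip + instruction_size      # sequential execution


print(next_ip(100, ("ADD", None), zero_flag=False))  # 104
print(next_ip(100, ("BEQ", 200), zero_flag=True))    # 200
print(next_ip(100, ("BEQ", 200), zero_flag=False))   # 104
```

The `zero_flag` argument stands in for the result of an earlier comparison that the branch depends on.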

After the IP is updated, the fetch stage begins again, and the process of fetching, decoding, executing, writing back and updating the IP is repeated until the program is completed or a halt instruction is encountered.

Overall, the instruction pointer update stage keeps the cycle going: it determines which instruction is fetched next, so resolving branches quickly and correctly helps to improve the overall performance of the processor.

Types of processors

There are several types of processors, including:

- Central Processing Units (CPUs): The most common type of processor found in personal computers, laptops, and servers. They are responsible for executing instructions and performing basic arithmetic, logic, and input/output operations.

- Graphics Processing Units (GPUs): These processors are designed to handle the complex calculations needed for graphics and video rendering. They are often used in gaming PCs and workstations, as well as in data centers for machine learning and scientific computing.

- Digital Signal Processors (DSPs): These processors are designed to handle the real-time processing of signals such as audio and video. They are often used in telecommunications, audio processing, and image processing.

- Microcontrollers: These are small, low-power processors that are often used in embedded systems, such as appliances, automobiles, and industrial control systems.

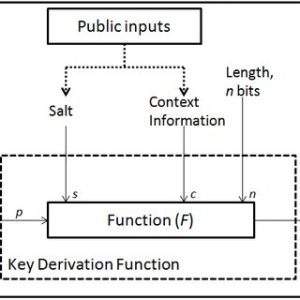

- Application-Specific Integrated Circuits (ASICs): These are custom-designed chips that are built for a specific application, such as Bitcoin mining or encryption. They are often used in high-performance computing and cryptography.

- Field-Programmable Gate Arrays (FPGAs): These are chips whose logic can be reconfigured after manufacturing to perform a wide range of functions, making them useful for a variety of applications such as signal processing, networking, and encryption.

Each of these types of processors has its own characteristics and features that make it suitable for specific types of applications and workloads.

How many processors are used in smartphones?

Most modern smartphones use a system-on-a-chip (SoC) design, which integrates multiple components, including the processor, memory, and other components, into a single chip. The processor, also known as the central processing unit (CPU), is one of the most important components of a smartphone.

In most smartphones, there are two types of processors:

- The main processor, also known as the application processor, is responsible for running the operating system and the majority of the applications on the device. This is typically a high-performance CPU based on the ARM architecture, as found in SoCs such as Qualcomm Snapdragon or Samsung Exynos.

- The secondary processor, also known as the co-processor, is responsible for specific tasks such as processing sensor data and managing power. This is typically a low-power CPU, such as one from the ARM Cortex-M series.

So, in summary, most smartphones combine a main application processor, which itself usually contains several CPU cores, with one or more co-processors to manage different tasks and workloads.

Processor architectures

The architecture of a processor refers to the design and organization of its components and how they work together to execute instructions. There are several different design approaches and processor architectures, including:

- Complex Instruction Set Computing (CISC): CISC processors have a large number of instructions, some of which can perform complex, multi-step tasks with a single instruction. This can make programs more compact, but the larger and more varied instruction set makes the processor harder to decode and implement efficiently.

- Reduced Instruction Set Computing (RISC): RISC processors have a smaller number of simple instructions that can be executed quickly. These processors are designed to be more efficient, but may require more instructions to perform the same task as a CISC processor.

- x86: This is a CISC architecture that was originally developed by Intel. It is the most widely used architecture for desktop and laptop computers, and is also found in servers and some mobile devices.

- ARM: This is a RISC architecture that was originally developed by Acorn Computers and is now licensed by Arm (formerly ARM Holdings). It is widely used in mobile devices, such as smartphones and tablets, as well as in embedded systems and Internet of Things (IoT) devices.

- PowerPC: This is a RISC architecture that was developed by Apple, IBM, and Motorola. It was used in Apple Macintosh computers until 2006 and in some gaming consoles.

- MIPS: This is a RISC architecture that was developed by MIPS Technologies, and it’s commonly used in embedded systems and networking devices.

Each architecture has its own set of instructions, known as the instruction set architecture (ISA), and its own unique features and characteristics that make it suitable for specific types of applications and workloads.
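
The CISC and RISC trade-off described above can be illustrated with a toy example that computes `c = a + b` in two styles. Both "instruction sets" here are invented: `ADDM` stands for a hypothetical CISC-style memory-to-memory add, while `LOAD`, `ADD`, and `STORE` stand for a hypothetical RISC-style load/store sequence.

```python
def cisc_style(memory):
    """One complex instruction reads both operands from memory and stores the sum."""
    program = [("ADDM", "c", "a", "b")]
    for _, dst, src1, src2 in program:
        memory[dst] = memory[src1] + memory[src2]
    return len(program)  # number of instructions executed


def risc_style(memory):
    """Simple instructions only: arithmetic works on registers, not on memory."""
    registers = {}
    program = [
        ("LOAD", "r1", "a"),
        ("LOAD", "r2", "b"),
        ("ADD", "r3", "r1", "r2"),
        ("STORE", "r3", "c"),
    ]
    for instruction in program:
        op = instruction[0]
        if op == "LOAD":
            _, reg, address = instruction
            registers[reg] = memory[address]
        elif op == "ADD":
            _, rd, rs1, rs2 = instruction
            registers[rd] = registers[rs1] + registers[rs2]
        elif op == "STORE":
            _, reg, address = instruction
            memory[address] = registers[reg]
    return len(program)


mem1 = {"a": 2, "b": 3, "c": 0}
mem2 = {"a": 2, "b": 3, "c": 0}
print(cisc_style(mem1), mem1["c"])  # 1 5
print(risc_style(mem2), mem2["c"])  # 4 5
```

The instruction count alone does not decide which version is faster: the single complex instruction still has to do the same memory accesses internally, and the simple instructions are easier to decode and pipeline.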

Materials used in processors

Processors are typically made from silicon, which is a semiconductor material. Silicon is the most widely used material for processors because it can be easily manipulated to create transistors, the building blocks of processors.

A modern processor is made up of millions or even billions of transistors, which are tiny switches that can be turned on or off to represent the 1s and 0s of binary data. The transistors are used to create logic gates, which are the basic building blocks of digital circuits. These circuits are used to perform the operations specified by the instructions that the processor executes.
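
To show how simple gates combine into circuits that do arithmetic, the sketch below builds the common gates out of NAND alone and then chains full adders into a 4-bit adder. It models gates as Python functions on the integers 0 and 1; the function names and the 4-bit width are choices made for this example.

```python
def nand(a, b):
    return 0 if (a and b) else 1


# Every other gate can be built from NAND.
def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    return nand(not_(a), not_(b))


def xor(a, b):
    return and_(or_(a, b), nand(a, b))


def full_adder(a, b, carry_in):
    """Add three bits; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out


def add_4bit(x, y):
    """Ripple-carry adder: chain four full adders, lowest bit first."""
    carry, result = 0, 0
    for i in range(4):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


print(add_4bit(5, 6))  # (11, 0)
print(add_4bit(9, 9))  # (2, 1): 18 does not fit in 4 bits, so the carry is set
```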

The silicon used to make processors is typically doped, or mixed, with small amounts of other materials, such as boron or phosphorus, to create p-type and n-type semiconductors. These semiconductors are used to create the transistors that make up the processor.

The transistors are built directly on a silicon wafer, which is a thin slice of silicon, using processes such as photolithography, etching, and doping. The wafer is then cut into individual dies, each of which is an individual processor. These dies are then packaged, and the packaged chip is soldered onto a circuit board or placed in a socket to make the final product.

It’s worth noting that there is ongoing research on alternative materials to silicon for processors, such as carbon-based materials, which could potentially make processors smaller, faster and more energy efficient.

How to choose an efficient processor for a PC or server

Choosing an efficient processor for a PC or server depends on the specific needs of the system and the types of workloads that it will be running. Here are a few factors to consider when choosing a processor:

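- Clock speed: This is measured in gigahertz (GHz) and represents the number of clock cycles that a processor completes per second. A higher clock speed generally means that a processor can perform tasks more quickly, although the comparison is only reliable between processors of the same design, because the amount of work done per cycle differs between designs.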

- Core count: This is the number of cores that a processor has. Each core can execute instructions independently, so a processor with more cores can perform more tasks simultaneously.

- Cache: This is a small, fast memory that is built into the processor and is used to store frequently used data. A larger cache can help to improve performance by reducing the number of times the processor needs to access main memory.

- TDP (Thermal Design Power): This is the amount of heat, measured in watts, that the cooling system must be able to dissipate when the processor runs under sustained load, and it serves as a rough indicator of power consumption. A lower TDP generally means that the processor is more energy efficient, which can be important for servers or other systems that are running for long periods of time.

- Instruction set: The instruction set architecture (ISA) defines the set of instructions that a processor can execute. Some ISAs are more efficient than others, and some ISAs are more suited to specific types of workloads.

- The intended use: Different processors are designed for different types of workloads. For example, a gaming PC will require a high-performance processor, while a server will require a processor that can handle multiple threads and large amounts of data.
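
When weighing clock speed against other factors, a common textbook estimate of execution time is instruction count multiplied by the average cycles per instruction (CPI), divided by the clock rate. The sketch below applies it to two made-up processors; the CPI and clock values are hypothetical and not measurements of any real product.

```python
def cpu_time_seconds(instruction_count, cycles_per_instruction, clock_hz):
    """Estimated execution time = instructions x CPI / clock rate."""
    return instruction_count * cycles_per_instruction / clock_hz


program_size = 10_000_000_000  # 10 billion instructions (hypothetical workload)

# Hypothetical CPU A: higher clock speed, but one cycle per instruction on average.
time_a = cpu_time_seconds(program_size, 1.0, 4.0e9)
# Hypothetical CPU B: lower clock speed, but two instructions per cycle on average.
time_b = cpu_time_seconds(program_size, 0.5, 3.0e9)

print(f"CPU A: {time_a:.2f} s, CPU B: {time_b:.2f} s")  # CPU A: 2.50 s, CPU B: 1.67 s
```

In this example the processor with the lower clock speed finishes first, which is why benchmarks on the intended workload are usually a better guide than GHz figures alone.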

It’s also important to consider the compatibility of the processor with the motherboard, the power supply and the cooling system, as well as the cost.

In summary, when choosing a processor for a PC or server, it’s important to consider the specific needs of the system, the types of workloads that it will be running, and the compatibility with the rest of the components.